Research & Articles

Sharing what the data shows us.

New Features: Critical Indicators & Known Exploitation Calendar Heatmap

We built critical indicators to explain the reasoning behind any CVE’s Empirical Score (0% - 100% real-world exploitation risk). Every CVE we analyze is modeled against over 2,000 data points. We took these model weight contributions and grouped them into the following categories: Chatter, Exploitation, Threat Intelligence, Vulnerability Attributes, Exploit Code, References, and Vendor.
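As a rough illustration of the grouping step, here is a minimal sketch. Only the category names come from the description above. The feature names, the feature-to-category mapping, and the contribution values are hypothetical and do not reflect our actual model.

```python
from collections import defaultdict
from typing import Dict

# Hypothetical mapping from individual model features to indicator categories.
# The category names match the list above; the feature names are invented.
FEATURE_CATEGORY: Dict[str, str] = {
    "social_mentions": "Chatter",
    "news_mentions": "Chatter",
    "exploit_repo_present": "Exploit Code",
    "vendor_name": "Vendor",
}

def group_contributions(contributions: Dict[str, float]) -> Dict[str, float]:
    """Sum per-feature contributions into per-category totals."""
    totals: Dict[str, float] = defaultdict(float)
    for feature, value in contributions.items():
        # Features without a known category are kept visible rather than dropped.
        category = FEATURE_CATEGORY.get(feature, "Uncategorized")
        totals[category] += value
    return dict(totals)

# Hypothetical contributions for a single CVE.
example = {
    "social_mentions": 0.25,
    "news_mentions": 0.25,
    "exploit_repo_present": 0.5,
    "vendor_name": -0.125,
}
grouped = group_contributions(example)
```

In this sketch, a negative total means the category pushed the score down, and a positive total means it pushed the score up.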

Risk Model Slop

In cybersecurity risk scoring, “risk model slop” is the quiet but widening gap between what a probability means in a model and how vendors distort it once it leaves its original calibration.

Local Models vs Global Scores: Why Context Isn’t Enough

Context improves interpretation; local models improve decisions. If you want autonomous, defensible security actions, invest in a model that learns your environment and keeps learning as it changes.

Finding New Exploits with A Bespoke Model

“Why do we need another scoring system?” is not the best question to ask. Instead, we need to get used to asking about performance. This post walks through an example from our latest improvement to our exploit code classifier.

It’s Not About Making a Scoring System

“Why do we need another scoring system?” is not the best question to ask. Instead, we need to get used to asking about performance. This post walks through an example from our latest improvement to our exploit code classifier.

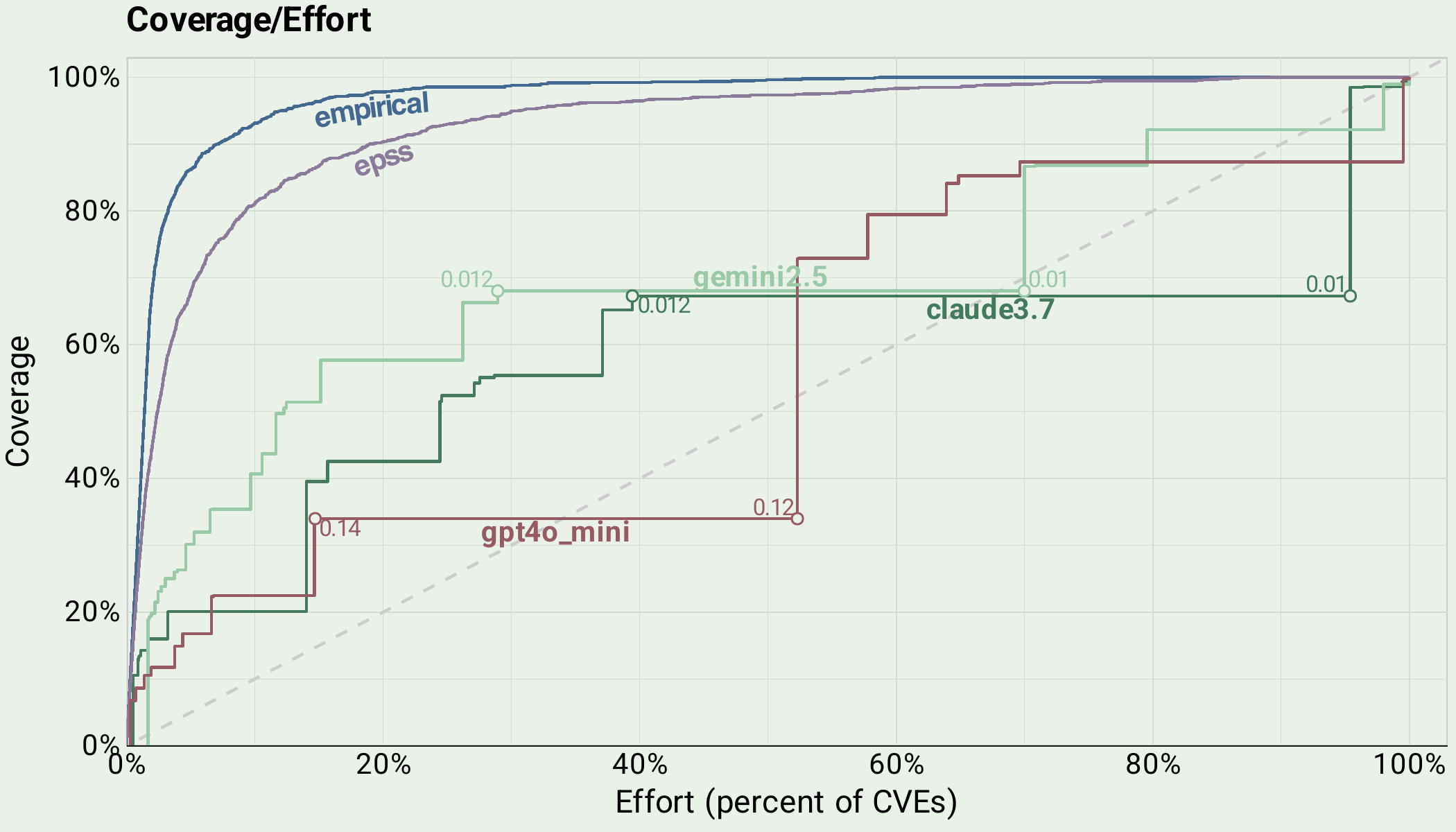

Benchmarking LLMs on the Vulnerability Prioritization Task

LLMs underperform EPSS in estimating vulnerability exploitation in the next 30 days.

Known Exploited vs Recently Exploited (series 2 of 5)

Past exploitation is not a guarantee of future exploitation. However, recent exploitation is in fact a powerful predictor of future exploitation!

Known (Re-)Exploited Vulnerabilities (series 1 of 5)

Conventional wisdom in cybersecurity tells us that if a vulnerability is known to be exploited, everyone should patch it immediately, but the reality is a lot more nuanced. Known exploitation in the past does not guarantee future exploitation.

Watch our co-founder speak at VulnCon 2025

Watch our co-founder Jay Jacobs present research and facilitate industry discussions at CVE Program & FIRST VulnCon 2025 in Raleigh, NC.

Only Your Data Can Truly Anticipate Threats

In cybersecurity, understanding exploitation threats hinges on the quality and source of the data analyzed. Traditionally, vulnerability management has relied heavily on secondary source data, such as Known Exploited Vulnerabilities (KEV) lists, which compile vulnerabilities based on reported incidents. While these lists provide valuable references, relying solely on them leaves significant blind spots. Secondary sources, by nature, reflect past events, often with delays and incomplete context, leading organizations to respond reactively rather than proactively.

Explore Model Thresholds

Thresholds allow security teams to filter and assess which vulnerabilities are most critical to remediate. Organizations have to make tough calls when choosing which vulnerabilities to prioritize, and thresholds allow teams to make educated decisions based on global model data.
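To make the idea concrete, here is a minimal sketch of threshold filtering. The CVE identifiers and scores are hypothetical placeholders on a 0 to 1 scale, not output from our models, and `above_threshold` is an illustrative helper rather than part of any API.

```python
from typing import Dict, List

def above_threshold(scores: Dict[str, float], threshold: float) -> List[str]:
    """Return CVE IDs whose score meets or exceeds the threshold, highest score first."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    selected = [cve for cve, score in scores.items() if score >= threshold]
    return sorted(selected, key=lambda cve: scores[cve], reverse=True)

# Hypothetical scores for a small backlog (placeholder IDs, invented values).
example_scores = {
    "CVE-0000-0001": 0.92,
    "CVE-0000-0002": 0.35,
    "CVE-0000-0003": 0.08,
    "CVE-0000-0004": 0.61,
}

# Raising the threshold shrinks the list a team commits to remediating first.
priority_at_half = above_threshold(example_scores, 0.5)
priority_at_ninety = above_threshold(example_scores, 0.9)
```

Comparing the lists at different thresholds shows the trade-off a team is making: a lower threshold covers more vulnerabilities but demands more remediation effort.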

Explore Our New API Docs

Today we publicly launched our API documentation for Global and EPSS models. Our API is the primary way users interact with our data. Creating docs from scratch is a team effort. Here’s how we managed to draft, edit, and release our docs.

Supporting EPSS: Our Vision for a More Data-Driven Future

At Empirical Security, we have known for some time now that EPSS serves as essential infrastructure within cybersecurity operations (over 100 vendors incorporate it into their products today). Our support for EPSS aligns closely with our broader vision of evolving cybersecurity tools into a more rigorous and data-driven framework. Our longstanding position has been clear: all cybersecurity tools need to become significantly more data-driven to effectively handle the complexity of current threats.